Brockman, whose company OpenAI is widely understood to be the most advanced AI project in the world, made these comments during a panel discussion that included Netanyahu, Elon Musk and MIT physicist Max Tegmark. As they gathered to discuss the future of AI, much of the conversation orbited around two possible futures: the extinction of mankind, or a “heaven” in which AGI eliminates poverty, hunger, and sickness and mankind merges with machines.

Brockman acknowledged the major risks in creating AGI but told the rest of the panel that it is this “heaven” that his company is working to bring about.

“I think this whole arc, in my mind, is all about paradigm shift, right?” Brockman said. “And I think that, even the question of what would that heaven, post-AGI, positive future look like? I think even that is hard for us to imagine what the true upside could be.”

Brockman went on to name one thinker, in particular, who he believes best understands the future of AI.

“The thinker who I think had the best foresight about how the AI revolution was going to play out is actually Ray Kurzweil,” he argued, with Musk immediately expressing his agreement.

Kurzweil, a Jewish author and inventor who currently works as a director of engineering at Google, is a famous transhumanist, best known for his books predicting “the Singularity.”

“His book Singularity is Near gets, like, a lot of shit,” Brockman went on to say.

“I think that people, you know, kind of assume it’s going to be this…religious text, but it’s instead a very dry, analytical text. And he just looks at the compute curves, and he says this is the fundamental un-locker of intelligence. Everyone thought that was crazy, and now it’s basically true — it’s basically common wisdom. And part of what he says is, ‘Look, what’s going to happen is in 2030 — first of all he says AGI: 2029.”

Here Musk again interjected: “Yeah. I keep telling people, it seems to be almost exactly right.”

“It’s spooky. It’s spooky,” Brockman continued. “2030 is when the merge happens. So we’ve got Neuralink coming. And uh, you know, maybe other systems like that. And what does it mean once you actually are kind of merging with an intelligence?”

While Musk said he believed his brain-computer interfaces — which are being developed through his company Neuralink — may not be quite ready for Kurzweil’s 2030 prediction, he agreed that humanity is on the doorstep of the “singularity.”

“You know, sometimes digital superintelligence is called, like, a singularity — like a black hole. Because just like with a black hole, it’s difficult to predict what happens after you pass the event horizon…” he said.

“And we are currently circling the event horizon of the black hole that is digital superintelligence.”

]]>But even if it isn’t, the powers-that-be want it. They see it as the ultimate means to not only solve their problems, but also to offer a “god” that can be worshipped by the masses. AGI will be able to “solve” problems that cannot be solved today, and that will greatly expand the burgeoning cult of AI worship.

Elon Musk and others have called for AI developers to tap the brakes. It’s not enough, but at least it’s something. At least some people are trying to be cautious. But Bill Gates is working on behalf of his Globalist Elite Cabal (often referred to as the New World Order) to push forward as quickly as possible. The sooner they can achieve and control Artificial General Intelligence, the faster they’ll be able to implement their plans of depopulation and total control.

If Bill Gates is definitely the bad guy for opposing a pause, does that mean Elon Musk is a good guy for wanting one? Not necessarily. I’d like to think Musk is being cautious as a smart man who’s not engaged with the Globalist Elite Cabal, but I’m just not sure. As I’ve said for the last year or so, he may be as he appears on the surface. He may also be a Trojan Horse who will guide the “red-pilled” population down the same path as the woke worshippers of people like Gates.

I’d put the chances of Musk being good or evil at about 50/50.

Here’s an article by Jason Cohen at Daily Caller News Foundation that breaks down the AI feud between the two billionaires…

Bill Gates Opposes Elon Musk’s AI Pause Plan, Says Technology’s Future Won’t Be As ‘Grim’ As Feared

Microsoft founder Bill Gates advocated against a pause on artificial intelligence (AI) development, as proposed by billionaire Twitter owner Elon Musk and others, in a recent blog post.

Gates wrote that society should refrain from attempting to halt the advancement of AI, seeming to reference a pause on AI experiments that technologists such as Musk have called for, according to the blog post he published. Further, he countered common predictions regarding the future impact of AI, asserting that it will not be as “grim” or as “rosy” as portrayed by some.

“We should not try to temporarily keep people from implementing new developments in AI, as some have proposed,” Gates wrote. Musk, AI researchers and prominent individuals such as Steve Wozniak suggested a six-month moratorium on massive AI experiments, emphasizing concerns such as job automation and propaganda, according to an open letter they published in March.

Gates addressed these concerns in his blog, but argued a pause is not ideal because continued development can help protect against the most dangerous outcomes for AI.

“Government and private-sector security teams need to have the latest tools for finding and fixing security flaws before criminals can take advantage of them,” Gates wrote. “Cyber-criminals won’t stop making new tools. Nor will people who want to use AI to design nuclear weapons and bioterror attacks. The effort to stop them needs to continue at the same pace.”

Chinese President Xi Jinping told Gates at a meeting in June that he is open to letting American AI companies such as Microsoft into China, according to Reuters. Microsoft is backing OpenAI, the creator of the popular chatbot ChatGPT, with billions of dollars, according to the company.

Musk announced the founding of an AI company on Wednesday. “Announcing formation of @xAI to understand reality,” he tweeted. He also added the company name to his Twitter bio.

“I think we should be cautious with AI, and I think there should be some government oversight because it is a danger to the public,” Musk told Daily Caller co-founder Tucker Carlson in an April Fox News interview.

The Gates Foundation did not immediately respond to the Daily Caller News Foundation’s request for comment.

All content created by the Daily Caller News Foundation, an independent and nonpartisan newswire service, is available without charge to any legitimate news publisher that can provide a large audience. All republished articles must include our logo, our reporter’s byline and their DCNF affiliation. For any questions about our guidelines or partnering with us, please contact [email protected].

]]>The reasons for this are many, not the least of which is how easy it is to become enamored with the problem-solving nature of AGI. But as with any technological advancement intended to make life better, there are always unintended consequences. With AGI, the consequences are far greater than anything we’ve seen.

Let’s look at the example of the internet. Did the internet fulfill its promise of connecting the world? Yes, for the most part. Did it also create a western society that has devolved in nearly every cognitive way as a result of so much access to instant gratification? Absolutely. Has it been turned into a safe haven for depravity and crime? Absolutely. As unpopular as it might be to say, I would argue that people were more self-sufficient and society was more moral before the internet changed the world.

AGI’s consequences will be far worse. Keep that in mind as you read this informative piece that leans a little too much in favor of AGI for my liking. I’m publishing it as is, not because I like how it paints AGI but because I trust my audience to be discerning with the information…

When the latest iteration of generative artificial intelligence dropped in late 2022, it was clear that something significant had changed.

The language model ChatGPT reached 100 million active monthly users in just two months, making it the fastest-growing consumer application in history. Meanwhile, Goldman Sachs predicted that AI could add 7% to global GDP over a 10-year period, almost $7 trillion, but also replace 300 million jobs in the process.

But even as AI continues to disrupt every aspect of life and work, it’s worth taking a step back.

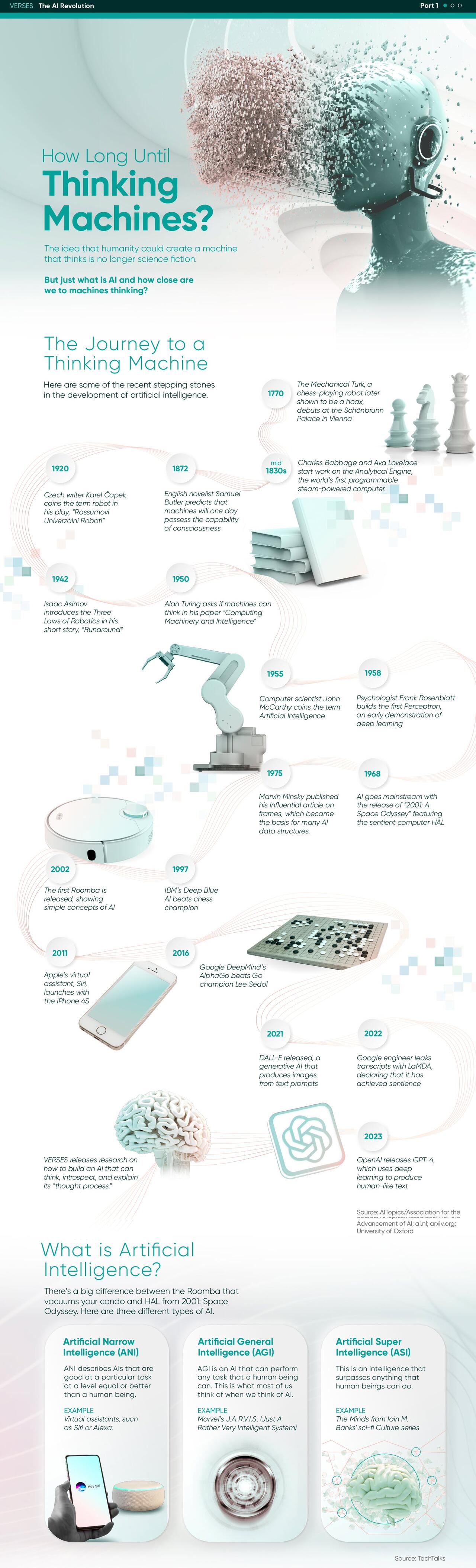

In this visualization via Visual Capitalist’s Chris Dickert and Sabrina Fortin, the first in a three-part series called The AI Revolution for sponsor VERSES AI, we ask how we got here, where we’re going, and how close we are to achieving a truly thinking machine.

Milestones to Mainstream

The term “artificial intelligence” was coined by computer scientist John McCarthy in 1955 in a conference proposal. Along with Alan Turing, Marvin Minsky, and many others, he is often referred to as one of the fathers of AI.

Since then, AI has grown by leaps and bounds. It mastered chess, beating Russian grandmaster and former World Chess Champion Garry Kasparov in 1997. In 2016, Google’s AlphaGo beat South Korean Go champion Lee Sedol, 4-1. The 19-year gap between those achievements is explained by the complexity of Go, which has 10^360 possible moves compared to chess’ paltry 10^123 combinations.

DALL-E arrived in 2021 and GPT-4 in early 2023, which brings us to today.

But What is Artificial Intelligence?

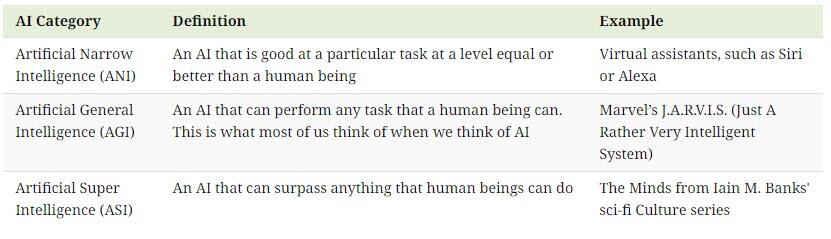

There’s a big difference between the Roomba that vacuums your condo and HAL from 2001: A Space Odyssey. This is why researchers working in the field have come up with the following ways to classify AI:

Despite a false alarm by one Google software engineer in 2022 and a paper by early GPT-4 boosters, no one really believes that recent generative AIs qualify as thinking machines, however you define it. ChatGPT, for all its capabilities, is still just a souped-up version of autocomplete.

Do Androids Dream of Electric Sheep?

That was the title of Philip K. Dick’s science fiction classic and the basis for the movie Blade Runner. In it, Harrison Ford plays a blade runner, a kind of private investigator who uses a version of the Turing Test to ferret out life-like androids. But we’re not Harrison Ford and this isn’t science fiction, so how could we tell?

People working in the field have proposed various tests over the years. Cognitive scientist Ben Goertzel thought that if an AI could enroll in college, do the coursework and graduate, then it would pass. Steve Wozniak, co-founder of Apple, suggested that if an AI could enter a strange house, find the kitchen, and then make a cup of coffee, then it would meet the threshold.

A common thread that runs through many of them, however, is the ability to do the one thing that humans do without effort: generalize, adapt, and solve problems. And this is something that AI has traditionally struggled with, even as it continues to excel at other tasks.

Can Current State-of-the-Art AI Achieve Thinking Machines?

And it may be that the current approach, which has shown incredible results, is running out of road.

Researchers have created thousands of benchmarks to test the performance of AI models on a range of human tasks, from image classification to natural language inference. According to Stanford University’s AI Index, AI scores on standard benchmarks have begun to plateau, with median improvement in 2022 limited to just 4%.

New comprehensive benchmark suites have begun to appear in response, like BIG-Bench and HELM, but will these share the same fate as their predecessors? Quickly surpassed, but still no closer to an AI like J.A.R.V.I.S. that could pass the Wozniak Coffee Test?

Imagine a Smarter World

VERSES AI, a cognitive computing company specializing in next generation AI and the sponsor of this piece, may have an answer.

The company recently released research that shows how to build an AI that can not only think, but also introspect and explain its “thought processes.” Catch the next part of The AI Revolution series to learn more.

]]>